Software projects don’t always deliver on time or deliver what’s wanted; according to the Standish Group only 31% of software projects are successful, and of these successful projects only 46% return high value…

Fundamentally, testing as an activity is about finding and preventing problems that will affect the quality of what you’re delivering. This isn’t always about plain bug hunting or automated test suites; it’s also about managing a project smoothly, because when projects slip, as they do, quality suffers.

Generally we use RAG (Red, Amber, Green) statuses to measure how a project is running. But what do these mean? Red, it’s late; Amber, it might be late; Green, it’s going OK? Or something like that. To be honest, I’m not entirely sure, because everyone’s take is different. Of course, RAG statuses should be clearly defined at project inception; however, this isn’t always the case. As I frequently say, if everything that should happen did happen, I wouldn’t have had a twenty-five-year career in testing.

So, completely tongue in cheek, I’d like to suggest a different taxonomy for project status, based on a hierarchy of military terms. I’ll also suggest what test management should be doing for SNAFU and FUBAR projects. When it comes to a clusterfudge, you’re on your own!

SNAFU (Situation Normal All Fouled Up)

This gentleman is Private SNAFU. He featured in a series of cartoons made from 1943 to 1945 by the US Army Air Forces First Motion Picture Unit. The cartoons showed what happened when service personnel didn’t follow regulations. The majority of the cartoons were written by one Theodor Geisel, whom you might have heard of by his better-known pen name, Dr Seuss.

Organisations are made of people, and because of this they can be surprisingly messy in how they function. When I was young I used to love blooper programmes on TV, as they showed that behind the slick and polished exterior of the TV screen, things went wrong on a regular basis.

This is the base state of projects: things don’t run smoothly, and there is a series of low-level issues to overcome in order to deliver on time.

What should test management be doing?

This is where test management should be making sure that potential project issues have been anticipated and mitigation measures are ready. For me, a key element in tackling SNAFU is planning, particularly keeping RAID logs up to date.

However, these need to be revisited regularly; as the old adage goes, “No plan survives contact with the enemy”. Any plan, including your RAID logs, that is created in a changing environment must be routinely assessed and adjusted.

FUBAR (Fouled Up Beyond All Recognition)

It appears this phrase came from the USMC in World War 2 and was then popularised, if that is the correct word, during the US Vietnam conflict, which, when all is said and done, was pretty FUBARed from the American perspective.

In my taxonomy, FUBAR is for projects that are going off the rails. In conventional RAG terms, these would be either Amber or Red.

What should test management be doing?

When trying to turn around a project that is FUBAR, realism is key. Testing is unlikely to be the reason the project is shifting right, but testing will be a key part of getting it back on track. As a tester or test manager, you need to provide accurate estimates, not just what people want to hear. It’s a regular occurrence on FUBAR projects to be asked to trim testing estimates for activities that occur at the end, such as UAT or SIT. Fine, you could do that, but if you do, you’ll just be revisiting the timelines later.

Be honest with your stakeholders and ask for help when you need it. Ultimately, our job should be to help our organisations survive and thrive, so don’t be afraid of talking to project sponsors, and keep the sunk cost fallacy in mind.

Of course, it may be that your project sponsors are so deeply committed to the project that they don’t want to hear what you have to say. That brings us to the next status.

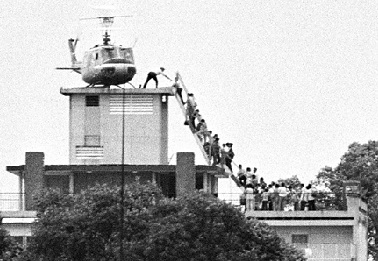

The Clusterfudge: Red and beyond

The “clusterfudge” (please note I’ve sanitised the wording) is where things are now seriously wrong. The photo above, by Hubert van Es, shows a helicopter evacuating people from a Saigon rooftop during the fall of Saigon in 1975: truly a clusterfudge of a situation from the US perspective.

The etymology of the word is somewhat obscure, but it came about during the 1960s and was popularised during the US Vietnam conflict. One attribution is to beat poet Ed Sanders; however, my favoured origin, and the one that fits with this talk, is that it stems from the Oak Leaf Clusters worn by Majors and Lieutenant Colonels in the US forces. A cluster “fudge” came about when these officers got involved in a FUBAR situation and made it worse.

Stanford Prof Bob Sutton describes a clusterfudge as being made up of a mix of illusion, impatience and incompetence. Illusion is when a decision maker believes a goal is easier to achieve than it really is; a current high-profile example might be Putin believing that Russian troops could take Kyiv in three days. Impatience is trying to release in a hurry or within false time frames. Incompetence is where leaders lack technical competence and aren’t willing to listen to experts.

Hopefully your projects will be more SNAFU than FUBAR. Chaos and churn are part of life, and it’s our job as test professionals to look for problems before they happen and to have contingencies in place to deal with them.